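What is Kubernetes?

In this article, we review what Kubernetes and Kubeadm are, and how to use Kubeadm to install Kubernetes, create a cluster, and set up worker nodes. If you are not yet familiar with Kubernetes, we recommend reading our article on Kubernetes basics.

Kubernetes (commonly abbreviated K8s) is an open-source system for automating the deployment, scaling, and management of containerized applications. Some of the benefits of Kubernetes include: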

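- Automated rollouts and rollbacks. Kubernetes rolls out changes to an application or its configuration progressively while monitoring its health, so that it does not take down all instances at the same time. If something goes wrong, Kubernetes rolls the change back for us.

- Service discovery and load balancing. Kubernetes gives Pods their own IP addresses and a single DNS name for a set of Pods, and can distribute load across them.

- Storage orchestration. Users can automatically mount local or cloud storage systems.

- Automatic bin packing. Kubernetes places containers automatically based on their resource requirements and other constraints. The better the containers and their resources are allocated, the better the system performs.

- Batch execution. In addition to services, Kubernetes can manage your batch workloads.

- Self-healing. Kubernetes monitors the state of containers and replaces them with new ones if something goes wrong. Containers that have failed are also recreated.

- Kubeadm. It automatically installs and configures Kubernetes components, including the API server, the Controller Manager, and Kube DNS.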

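What is Kubeadm?

Kubeadm is a toolkit shipped with the Kubernetes distribution that provides best-practice methods for building a cluster on existing infrastructure.

It performs the steps required to get a minimum viable cluster up and running.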

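Prerequisites

Server Configuration

To install Kubernetes, we used three servers with the following specifications.

- Master server – 4 CPUs and 4096 MB RAM

- Worker1 server – 2 CPUs and 4096 MB RAM

- Worker2 server – 2 CPUs and 4096 MB RAM

All servers run Ubuntu 18.04, and each uses the following IP address and DNS name.

- Master server – IP 192.168.50.58

- Worker1 server – IP 192.168.50.38

- Worker2 server – IP 192.168.50.178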
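
The server sizes listed above can be compared against the minimums that the kubeadm documentation gives (2 CPUs and 2 GB of RAM per machine) before anything is installed. The sketch below hard-codes the three servers from this article; the function name `meets_minimum` and its default thresholds are illustrative assumptions, not part of any Kubernetes tool.

```shell
#!/bin/sh
# meets_minimum CPUS MEM_MB [MIN_CPUS] [MIN_MEM_MB]
# Succeeds when both values reach the minimums (defaults: 2 CPUs, 2048 MB).
meets_minimum() {
  [ "$1" -ge "${3:-2}" ] && [ "$2" -ge "${4:-2048}" ]
}

# Check the three servers described in this article (name, CPUs, RAM in MB).
while read -r name cpus mem_mb; do
  if meets_minimum "$cpus" "$mem_mb"; then
    echo "$name: ok"
  else
    echo "$name: below minimum"
  fi
done <<EOF
master 4 4096
worker1 2 4096
worker2 2 4096
EOF
```

On a live server the two inputs could be taken from `nproc` and from the `MemTotal` line of `/proc/meminfo`. Keep in mind that the kernel usually reports slightly less memory than the nominal size of the machine.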

Update

The first task is to make sure all packages are up to date on every server.

    root@host:~# apt update -y && apt upgrade -y

Add Host File Information

Now we log in to each machine and edit the /etc/hosts configuration file with this command.

    root@host:~# tee -a /etc/hosts<<EOF
    > 192.168.50.58 master
    > 192.168.50.38 worker1
    > 192.168.50.178 worker2
    > EOF
    192.168.50.58 master
    192.168.50.38 worker1
    192.168.50.178 worker2
    root@host:~#

Now we can verify the changes in the hosts file.

    root@host:~# cat /etc/hosts
    127.0.0.1 localhost
    127.0.1.1 host
    ::1 ip6-localhost ip6-loopback
    fe00::0 ip6-localnet
    ff00::0 ip6-mcastprefix
    ff02::1 ip6-allnodes
    ff02::2 ip6-allrouters
    192.168.50.58 master
    192.168.50.38 worker1
    192.168.50.178 worker2
    root@host:~#

Disable Swap Memory

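For the kubelet to work properly, swap memory must be disabled. Swap is paging space on disk that holds data temporarily when RAM runs short. This change must be applied on every server. The sed and swapoff commands in this section take care of it.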
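
The fstab edit in this step can be tried on a scratch copy before the real file is touched. The sketch below is a slightly stricter variant of the substitution, not the exact command from this article: it skips lines that are already commented and matches tabs as well as spaces. The function name `comment_swap_entries` and the sample entries are made up for illustration, and in-place editing with `sed -i` in this form assumes GNU sed, as shipped with Ubuntu.

```shell
#!/bin/sh
# Comment out active swap entries in an fstab-style file, editing it in place.
# Lines that already start with '#' are left untouched.
comment_swap_entries() {
  sed -i -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$1"
}

# Demonstration on a scratch file with made-up entries (not the real /etc/fstab).
scratch=$(mktemp)
cat > "$scratch" <<'EOF'
UUID=aaaa-bbbb / ext4 defaults 0 1
/swapfile none swap sw 0 0
#/dev/sdb1 none swap sw 0 0
EOF
comment_swap_entries "$scratch"
cat "$scratch"   # only the /swapfile line gains a leading '#'
rm -f "$scratch"
```

Commenting the entry only affects the next boot; the swap that is currently active still has to be turned off separately.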

    root@host:~# sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab
    root@host:~# swapoff -a

Install Kubelet, Kubeadm, and Kubectl

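Next, we choose the components to install. In this case, we start with the following.

- Kubelet – a system service (the node agent) that runs on every node and manages the containers scheduled to it.

- Kubeadm – a command-line tool that installs and configures the various cluster components.

- Kubectl – a command-line tool for sending commands to the cluster through its API. It also makes working with the cluster from the terminal easier.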

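Add Repositories

With the following commands, we add extra repositories to the package manager and register their signing keys. We first install apt-transport-https so that external HTTPS sources can be added to the apt sources list securely, and then install kubelet, kubeadm, and kubectl. We demonstrate the initial installation on the master server, beginning with apt-transport-https.

    root@host:~# apt-get update && apt-get install -y apt-transport-https curl

Next, we add the Kubernetes repository key so that everything we install can be verified.

    root@host:~# curl -s https://packages.cloud.google.com/apt/doc/apt-key.gpg | apt-key add -
    OK
    root@host:~#

Now we add our repository. These commands reflect the Kubernetes v1.19 era in which this walkthrough was recorded; the Kubernetes project has since moved its packages to pkgs.k8s.io, so the legacy apt.kubernetes.io repository may no longer serve packages.

    root@host:~# echo "deb https://apt.kubernetes.io/ kubernetes-xenial main" | tee /etc/apt/sources.list.d/kubernetes.list
    deb https://apt.kubernetes.io/ kubernetes-xenial main
    root@host:~#

Installation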

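We can now update the server again so that it picks up the new repository, and then install the packages.

    root@host:~# apt update
    root@host:~# apt -y install vim git curl wget kubelet kubeadm kubectl

Hold the Packages

We mark kubelet, kubeadm, and kubectl as held so that apt does not upgrade them automatically. At this point the kubelet restarts every few seconds, because it is waiting in a loop for further instructions.

    root@host:~# apt-mark hold kubelet kubeadm kubectl
    kubelet set on hold.
    kubeadm set on hold.
    kubectl set on hold.
    root@host:~#

Verify the Installation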

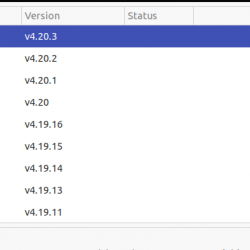

Now we check the versions of the installed components with the following command.

    root@host:~# kubectl version --client && kubeadm version
    Client Version: version.Info{Major:"1", Minor:"19", GitVersion:"v1.19.3", GitCommit:"1e11e4a2108024935ecfcb2912226cedeafd99df", GitTreeState:"clean", BuildDate:"2020-10-14T12:50:19Z", GoVersion:"go1.15.2", Compiler:"gc", Platform:"linux/amd64"}
    kubeadm version: &version.Info{Major:"1", Minor:"19", GitVersion:"v1.19.3", GitCommit:"1e11e4a2108024935ecfcb2912226cedeafd99df", GitTreeState:"clean", BuildDate:"2020-10-14T12:47:53Z", GoVersion:"go1.15.2", Compiler:"gc", Platform:"linux/amd64"}
    root@host:~#

Configure the Firewall

Next, we configure iptables to see traffic that crosses the network bridge. This change is essential because iptables (the server's default firewall) should always inspect traffic passing through the connection. We now load the overlay and br_netfilter kernel modules.

    root@host:~# modprobe overlay
    root@host:~# modprobe br_netfilter

In the sysctl configuration for K8s, we set each value to 1, which means the traffic is inspected.

    root@host:~# tee /etc/sysctl.d/kubernetes.conf<<EOF
    > net.bridge.bridge-nf-call-ip6tables = 1
    > net.bridge.bridge-nf-call-iptables = 1
    > net.ipv4.ip_forward = 1
    > EOF
    net.bridge.bridge-nf-call-ip6tables = 1
    net.bridge.bridge-nf-call-iptables = 1
    net.ipv4.ip_forward = 1
    root@host:~#

Now, reload sysctl.

    root@host:~# sysctl --system
    * Applying /etc/sysctl.d/10-console-messages.conf ...
    kernel.printk = 4 4 1 7
    * Applying /etc/sysctl.d/10-ipv6-privacy.conf ...
    net.ipv6.conf.all.use_tempaddr = 2
    net.ipv6.conf.default.use_tempaddr = 2
    * Applying /etc/sysctl.d/10-kernel-hardening.conf ...
    kernel.kptr_restrict = 1
    * Applying /etc/sysctl.d/10-link-restrictions.conf ...
    fs.protected_hardlinks = 1
    fs.protected_symlinks = 1
    * Applying /etc/sysctl.d/10-magic-sysrq.conf ...
    kernel.sysrq = 176
    * Applying /etc/sysctl.d/10-network-security.conf ...
    net.ipv4.conf.default.rp_filter = 1
    net.ipv4.conf.all.rp_filter = 1
    net.ipv4.tcp_syncookies = 1
    * Applying /etc/sysctl.d/10-ptrace.conf ...
    kernel.yama.ptrace_scope = 1
    * Applying /etc/sysctl.d/10-zeropage.conf ...
    vm.mmap_min_addr = 65536
    * Applying /usr/lib/sysctl.d/50-default.conf ...
    net.ipv4.conf.all.promote_secondaries = 1
    net.core.default_qdisc = fq_codel
    * Applying /etc/sysctl.d/99-sysctl.conf ...
    * Applying /etc/sysctl.d/kubernetes.conf ...
    net.bridge.bridge-nf-call-ip6tables = 1
    net.bridge.bridge-nf-call-iptables = 1
    net.ipv4.ip_forward = 1
    * Applying /etc/sysctl.conf ...
    root@host:~#

Install Docker

Docker is a container runtime product that uses operating-system-level virtualization to run our containers. These containers are isolated from each other and bundle the software, libraries, and configuration files needed to run an application. The next step is to add the repository to the package manager and verify its key. As a reminder, we must complete these tasks on every server.

First, we run a quick update with apt again. Then we install the gnupg2 package and add the GPG key. Finally, we add the Docker repository, update the server again, and install docker-ce (Community Edition).

    root@host:~# apt update
    root@host:~# apt install -y curl gnupg2
    root@host:~# curl -fsSL https://download.docker.com/linux/ubuntu/gpg | apt-key add -
    OK
    root@host:~# add-apt-repository "deb [arch=amd64] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable"
    root@host:~# apt update
    root@host:~# apt install -y containerd.io docker-ce

Create Directory and Configuration

Next, we write the Docker daemon configuration. It selects the systemd cgroup driver, the json-file log driver with a 100 MB size limit, and the overlay2 storage driver.

    root@host:~# mkdir -p /etc/systemd/system/docker.service.d
    root@host:~# tee /etc/docker/daemon.json <<EOF
    > {
    >   "exec-opts": ["native.cgroupdriver=systemd"],
    >   "log-driver": "json-file",
    >   "log-opts": {
    >     "max-size": "100m"
    >   },
    >   "storage-driver": "overlay2"
    > }
    > EOF
    {
      "exec-opts": ["native.cgroupdriver=systemd"],
      "log-driver": "json-file",
      "log-opts": {
        "max-size": "100m"
      },
      "storage-driver": "overlay2"
    }
    root@host:~#

Restart Docker

Now we reload systemd, restart Docker, and then enable the Docker daemon.

    root@host:~# systemctl daemon-reload
    root@host:~# systemctl restart docker
    root@host:~# systemctl enable docker
    Synchronizing state of docker.service with SysV service script with /lib/systemd/systemd-sysv-install.
    Executing: /lib/systemd/systemd-sysv-install enable docker
    root@host:~#

Verify Docker Status

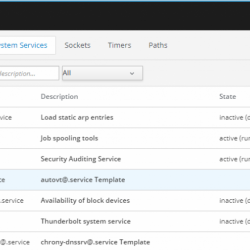

Now we can verify that Docker is up and running.

    root@host:~# systemctl status docker
    ● docker.service - Docker Application Container Engine
    Loaded: loaded (/lib/systemd/system/docker.service; enabled; vendor preset:
    Active: active (running) since Fri 2020-10-23 19:31:23 +03; 1min 6s ago
    Docs: https://docs.docker.com
    Main PID: 16856 (dockerd)
    Tasks: 13
    CGroup: /system.slice/docker.service
    └─16856 /usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock
    Oct 23 19:31:23 host dockerd[16856]: time="2020-10-23T19:31:23.570268584+03:00"
    Oct 23 19:31:23 host dockerd[16856]: time="2020-10-23T19:31:23.570272311+03:00"
    Oct 23 19:31:23 host dockerd[16856]: time="2020-10-23T19:31:23.570275888+03:00"
    Oct 23 19:31:23 host dockerd[16856]: time="2020-10-23T19:31:23.570389541+03:00"
    Oct 23 19:31:23 host dockerd[16856]: time="2020-10-23T19:31:23.626550911+03:00"
    Oct 23 19:31:23 host dockerd[16856]: time="2020-10-23T19:31:23.650814097+03:00"
    Oct 23 19:31:23 host dockerd[16856]: time="2020-10-23T19:31:23.661596420+03:00"
    Oct 23 19:31:23 host dockerd[16856]: time="2020-10-23T19:31:23.661656042+03:00"
    Oct 23 19:31:23 host dockerd[16856]: time="2020-10-23T19:31:23.671493768+03:00"
    Oct 23 19:31:23 host systemd[1]: Started Docker Application Container Engine.

Create the Master Server

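First, we need to make sure the br_netfilter module is loaded, using the following command.

    root@host:~# lsmod | grep br_netfilter
    br_netfilter 28672 0
    bridge 176128 1 br_netfilter
    root@host:~#

The br_netfilter kernel module is required to enable bridged traffic between Kubernetes pods across the cluster. It allows cluster members to appear as if they were directly connected to each other.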
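
A module loaded with modprobe does not survive a reboot, so it can be useful to script both the check and a boot-time list. The sketch below works on scratch files so it is safe to run anywhere; the helper names `module_loaded` and `write_modules_conf` are hypothetical, and the sample listing simply reuses the lsmod rows shown above.

```shell
#!/bin/sh
# module_loaded NAME [LISTING]
# Succeeds if NAME is the first field of any line in LISTING
# (defaults to /proc/modules, which has the module name in column one).
module_loaded() {
  awk -v m="$1" '$1 == m { found = 1 } END { exit !found }' "${2:-/proc/modules}"
}

# write_modules_conf PATH MODULE...
# Writes one module name per line, the format used by modules-load.d files.
write_modules_conf() {
  out="$1"; shift
  printf '%s\n' "$@" > "$out"
}

# Demonstration against scratch files.
dir=$(mktemp -d)
printf '%s\n' 'br_netfilter 28672 0' 'bridge 176128 1 br_netfilter' > "$dir/modules"
for mod in overlay br_netfilter; do
  if module_loaded "$mod" "$dir/modules"; then
    echo "$mod: loaded"
  else
    echo "$mod: not loaded"
  fi
done
write_modules_conf "$dir/k8s.conf" overlay br_netfilter
cat "$dir/k8s.conf"
rm -rf "$dir"
```

On a real node the output file would typically live under /etc/modules-load.d/ (the name k8s.conf is only an example), where systemd reads it at boot. A module that is compiled into the kernel does not appear in the listing, so "not loaded" here is a hint rather than proof.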

Start Kubelet

Next, we enable the kubelet and then pull the images for the K8s management components, such as etcd (the cluster database) and the API server.

    root@host:~# systemctl enable kubelet
    root@host:~# kubeadm config images pull
    [config/images] Pulled k8s.gcr.io/kube-apiserver:v1.19.3
    [config/images] Pulled k8s.gcr.io/kube-controller-manager:v1.19.3
    [config/images] Pulled k8s.gcr.io/kube-scheduler:v1.19.3
    [config/images] Pulled k8s.gcr.io/kube-proxy:v1.19.3
    [config/images] Pulled k8s.gcr.io/pause:3.2
    [config/images] Pulled k8s.gcr.io/etcd:3.4.13-0
    [config/images] Pulled k8s.gcr.io/coredns:1.7.0
    root@host:~#

Create the Cluster

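Now we create the cluster with the kubeadm command, using the following parameters.

- --pod-network-cidr – configures the pod network by setting its CIDR (Classless Inter-Domain Routing) range, a classless method of IP addressing.

- --control-plane-endpoint – a shared endpoint for all control-plane nodes, used when the cluster is built for high availability.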
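
The pod network range should not contain the addresses of the servers themselves. As a rough illustration of the CIDR arithmetic, the sketch below checks the three server IPs from this article against the 10.0.0.0/16 range used in this walkthrough. The helper names `ip_to_int` and `ip_in_cidr` are invented for this example, and the code assumes well-formed IPv4 input without leading zeros.

```shell
#!/bin/sh
# ip_to_int A.B.C.D
# Prints the address as a single 32-bit integer.
ip_to_int() (
  IFS=.
  set -- $1          # split the dotted quad into four positional parameters
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
)

# ip_in_cidr IP NETWORK/BITS
# Succeeds when IP falls inside the given CIDR range.
ip_in_cidr() (
  net=${2%/*}
  bits=${2#*/}
  # 4294967295 is 0xFFFFFFFF; shifting and masking keeps the top BITS bits.
  mask=$(( (4294967295 << (32 - bits)) & 4294967295 ))
  [ $(( $(ip_to_int "$1") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
)

# Check the server addresses from this article against the pod range.
pod_cidr=10.0.0.0/16
for ip in 192.168.50.58 192.168.50.38 192.168.50.178; do
  if ip_in_cidr "$ip" "$pod_cidr"; then
    echo "$ip overlaps $pod_cidr"
  else
    echo "$ip is outside $pod_cidr"
  fi
done
```

All three addresses fall outside the pod range, so each one is reported as "outside". The same function can be pointed at any other candidate range before it is passed to kubeadm.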

    root@host:~# kubeadm init \
    > --pod-network-cidr=10.0.0.0/16 \
    > --control-plane-endpoint=master
    W1023 21:29:58.178002 9474 configset.go:348] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io]
    [init] Using Kubernetes version: v1.19.3
    [preflight] Running pre-flight checks
    [preflight] Pulling images required for setting up a Kubernetes cluster
    [preflight] This might take a minute or two, depending on the speed of your internet connection
    [preflight] You can also perform this action in beforehand using 'kubeadm config images pull'
    [certs] Using certificateDir folder "/etc/kubernetes/pki"
    [certs] Generating "ca" certificate and key
    [certs] Generating "apiserver" certificate and key
    [certs] apiserver serving cert is signed for DNS names [host kubernetes kubernetes.default kubernetes.default.svc kubernetes.default.svc.cluster.local master] and IPs [10.96.0.1 192.168.50.58]
    [certs] Generating "apiserver-kubelet-client" certificate and key
    [certs] Generating "front-proxy-ca" certificate and key
    [certs] Generating "front-proxy-client" certificate and key
    [certs] Generating "etcd/ca" certificate and key
    [certs] Generating "etcd/server" certificate and key
    [certs] etcd/server serving cert is signed for DNS names [host localhost] and IPs [192.168.50.58 127.0.0.1 ::1]
    [certs] Generating "etcd/peer" certificate and key
    [certs] etcd/peer serving cert is signed for DNS names [host localhost] and IPs [192.168.50.58 127.0.0.1 ::1]
    [certs] Generating "etcd/healthcheck-client" certificate and key
    [certs] Generating "apiserver-etcd-client" certificate and key
    [certs] Generating "sa" key and public key
    [kubeconfig] Using kubeconfig folder "/etc/kubernetes"
    [kubeconfig] Writing "admin.conf" kubeconfig file
    [kubeconfig] Writing "kubelet.conf" kubeconfig file
    [kubeconfig] Writing "controller-manager.conf" kubeconfig file
    [kubeconfig] Writing "scheduler.conf" kubeconfig file
    [kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
    [kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
    [kubelet-start] Starting the kubelet
    [control-plane] Using manifest folder "/etc/kubernetes/manifests"
    [control-plane] Creating static Pod manifest for "kube-apiserver"
    [control-plane] Creating static Pod manifest for "kube-controller-manager"
    [control-plane] Creating static Pod manifest for "kube-scheduler"
    [etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"
    [wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s
    [apiclient] All control plane components are healthy after 13.004870 seconds
    [upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace
    [kubelet] Creating a ConfigMap "kubelet-config-1.19" in namespace kube-system with the configuration for the kubelets in the cluster
    [upload-certs] Skipping phase. Please see --upload-certs
    [mark-control-plane] Marking the node host as control-plane by adding the label "node-role.kubernetes.io/master=''"
    [mark-control-plane] Marking the node host as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]
    [bootstrap-token] Using token: bf6w4x.t6l461giuzqazuy2
    [bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles
    [bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes
    [bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials
    [bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token
    [bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster
    [bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace
    [kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key
    [addons] Applied essential addon: CoreDNS
    [addons] Applied essential addon: kube-proxy

    Your Kubernetes control-plane has initialized successfully!

To start using our cluster, we need to configure our user so that it is allowed to run kubectl.

    root@host:~# mkdir -p $HOME/.kube
    root@host:~# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    root@host:~# chown $(id -u):$(id -g) $HOME/.kube/config

We should now be able to deploy a pod network to the cluster.

    root@host:~# kubectl apply -f [podnetwork].yaml

Here [podnetwork].yaml stands for one of the options listed at: https://kubernetes.io/docs/concepts/cluster-administration/addons/

We can now join any number of control-plane nodes by copying the certificate authorities and service account keys to each node and then running the following command as root.

    root@host:~# kubeadm join master:6443 --token bf6w4x.t6l461giuzqazuy2 --discovery-token-ca-cert-hash sha256:8d0b3721ad93a24bb0bb518a15ea657d8b9b0876a76c353c445371692b7d064e --control-plane

The next command lets us join any number of worker nodes by running it as root on each of them.

    root@host:~# kubeadm join master:6443 --token bf6w4x.t6l461giuzqazuy2 --discovery-token-ca-cert-hash sha256:8d0b3721ad93a24bb0bb518a15ea657d8b9b0876a76c353c445371692b7d064e
    root@host:~#

Discovery Token

The final output of the command includes the unique token needed to add further master servers. If we need to add them, we can do so with this command.

    kubeadm join master:6443 --token bf6w4x.t6l461giuzqazuy2 --discovery-token-ca-cert-hash sha256:8d0b3721ad93a24bb0bb518a15ea657d8b9b0876a76c353c445371692b7d064e --control-plane

Configure Kubectl

Now we can configure kubectl with the following commands.

    root@host:~# mkdir -p $HOME/.kube
    root@host:~# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    root@host:~# chown $(id -u):$(id -g) $HOME/.kube/config

Check Cluster Status

Next, we check the status of the cluster.

    root@host:~# kubectl cluster-info
    Kubernetes master is running at https://master:6443
    KubeDNS is running at https://master:6443/api/v1/namespaces/kube-system/services/kube-dns:dns/proxy

    To further debug and diagnose cluster problems, use 'kubectl cluster-info dump'.
    root@host:~#

Install Calico

We now install and configure the Calico plugin. Calico is a host-based networking plugin for containers and virtual machines that is also used to enforce network security policy. We start the installation with the following command.

    root@host:~# kubectl apply -f https://docs.projectcalico.org/manifests/calico.yaml
    configmap/calico-config created
    customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created
    customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created
    clusterrole.rbac.authorization.k8s.io/calico-kube-controllers created
    clusterrolebinding.rbac.authorization.k8s.io/calico-kube-controllers created
    clusterrole.rbac.authorization.k8s.io/calico-node created
    clusterrolebinding.rbac.authorization.k8s.io/calico-node created
    daemonset.apps/calico-node created
    serviceaccount/calico-node created
    deployment.apps/calico-kube-controllers created
    serviceaccount/calico-kube-controllers created
    root@host:~#

Verify Running Pods

This command lets us check that the pods are running.

    root@host:~# watch kubectl get pods --all-namespaces
    NAMESPACE     NAME                                     READY   STATUS    RESTARTS   AGE
    kube-system   calico-kube-controllers-7d569d95-wfzjp   1/1     Running   0          2m52s
    kube-system   calico-node-jd5l6                        1/1     Running   0          2m52s
    kube-system   coredns-f9fd979d6-hb4bt                  1/1     Running   0          7m43s
    kube-system   coredns-f9fd979d6-tpbx9                  1/1     Running   0          7m43s
    kube-system   etcd-host                                1/1     Running   0          7m58s
    kube-system   kube-apiserver-host                      1/1     Running   0          7m58s
    kube-system   kube-controller-manager-host             1/1     Running   0          7m58s
    kube-system   kube-proxy-gvd5x                         1/1     Running   0          7m43s
    kube-system   kube-scheduler-host                      1/1     Running   0          7m58s
    root@host:~#

Verify the Configuration

The last check is to verify that the master server is ready and available.

    root@host:~# kubectl get nodes -o wide
    NAME   STATUS   ROLES    AGE   VERSION   INTERNAL-IP     EXTERNAL-IP   OS-IMAGE             KERNEL-VERSION     CONTAINER-RUNTIME
    host   Ready    master   46m   v1.19.3   192.168.50.58   <none>        Ubuntu 18.04.5 LTS   5.4.0-52-generic   docker://19.3.13
    root@host:~#

Create Worker Nodes

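With the master set up, we add worker nodes to the cluster so that K8s can handle the workload. To add the workers, we use the join command together with the token we received earlier when creating the cluster.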
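
Because the token and the CA certificate hash are long strings that are easy to truncate when copied between terminals, a quick format check before joining can save a failed attempt. The sketch below tests only the shape of the two values (a kubeadm bootstrap token is six characters, a dot, and sixteen characters, all lowercase letters or digits; the hash is `sha256:` followed by 64 hex digits). It does not contact the cluster, and the function names are made up for this example.

```shell
#!/bin/sh
# valid_bootstrap_token TOKEN
# Succeeds when TOKEN has the form [a-z0-9]{6}.[a-z0-9]{16}.
valid_bootstrap_token() {
  printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

# valid_ca_cert_hash HASH
# Succeeds when HASH is "sha256:" followed by exactly 64 lowercase hex digits.
valid_ca_cert_hash() {
  printf '%s\n' "$1" | grep -Eq '^sha256:[0-9a-f]{64}$'
}

# Values copied from the kubeadm init output in this article.
token=bf6w4x.t6l461giuzqazuy2
hash=sha256:8d0b3721ad93a24bb0bb518a15ea657d8b9b0876a76c353c445371692b7d064e

if valid_bootstrap_token "$token" && valid_ca_cert_hash "$hash"; then
  echo "join parameters look well-formed"
else
  echo "join parameters are malformed"
fi
```

A well-formed token can still be rejected if it has expired; bootstrap tokens are valid for 24 hours by default. In that case `kubeadm token create --print-join-command`, run on the master, prints a fresh join command.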

Add Worker 1

    root@host:~# kubeadm join master:6443 --token bf6w4x.t6l461giuzqazuy2 \
    > --discovery-token-ca-cert-hash sha256:8d0b3721ad93a24bb0bb518a15ea657d8b9b0876a76c353c445371692b7d064e
    [preflight] Running pre-flight checks
    [preflight] Reading configuration from the cluster...
    [preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'
    [kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
    [kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
    [kubelet-start] Starting the kubelet
    [kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

    This node has joined the cluster:
    * Certificate signing request was sent to apiserver, and a response was received.
    * The Kubelet was informed of the new secure connection details.

    Run 'kubectl get nodes' on the control-plane to see this node join the cluster.
    root@host:~#

Add Worker 2

    root@host:~# kubeadm join master:6443 --token bf6w4x.t6l461giuzqazuy2 \
    > --discovery-token-ca-cert-hash sha256:8d0b3721ad93a24bb0bb518a15ea657d8b9b0876a76c353c445371692b7d064e
    [preflight] Running pre-flight checks
    [preflight] Reading configuration from the cluster...
    [preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'
    [kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"
    [kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"
    [kubelet-start] Starting the kubelet
    [kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

    This node has joined the cluster:
    * Certificate signing request was sent to apiserver, and a response was received.
    * The Kubelet was informed of the new secure connection details.

    Run 'kubectl get nodes' on the control-plane to see this node join the cluster.
    root@host:~#

Verify Cluster Members

Now, on the master server, run the following command to check that Workers 1 and 2 have both been added to the cluster.

    root@host:~# kubectl get nodes
    NAME            STATUS   ROLES    AGE     VERSION
    host            Ready    master   55m     v1.19.3
    host-worker-1   Ready    <none>   4m48s   v1.19.3
    host-worker-2   Ready    <none>   3m5s    v1.19.3
    root@host:~#

Add an Application

Next, we deploy a test application to the cluster and check that it runs.

    root@host:~# kubectl apply -f https://k8s.io/examples/pods/commands.yaml
    pod/command-demo created
    root@host:~#

Verify Pod Status

Finally, we use the command below to check whether the pod has started.

    root@host:~# kubectl get pods
    NAME           READY   STATUS      RESTARTS   AGE
    command-demo   0/1     Completed   0          30s
    root@host:~#

This completes our installation and configuration of Kubernetes.
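
As a final sanity check, the node listing can be inspected by a script instead of by eye. The sketch below reads rows in the format that `kubectl get nodes --no-headers` prints and lists any node whose STATUS column is not exactly `Ready`. The function name `not_ready_nodes` is an assumption for this example, and the `NotReady` row in the sample input is invented to show a failing case.

```shell
#!/bin/sh
# not_ready_nodes
# Reads node rows (NAME STATUS ROLES AGE VERSION) on standard input and
# prints the names of nodes whose STATUS is anything other than "Ready".
# A cordoned node ("Ready,SchedulingDisabled") is therefore reported too.
not_ready_nodes() {
  awk '$2 != "Ready" { print $1 }'
}

# Sample rows modelled on the listing in this article; the last row is
# deliberately changed to NotReady for demonstration.
printf '%s\n' \
  'host Ready master 55m v1.19.3' \
  'host-worker-1 Ready <none> 4m48s v1.19.3' \
  'host-worker-2 NotReady <none> 3m5s v1.19.3' | not_ready_nodes
# prints: host-worker-2
```

Against a live cluster the same function would be fed from kubectl, for example `kubectl get nodes --no-headers | not_ready_nodes`. Empty output means every node reported `Ready`.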

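Conclusion

In this tutorial, we learned how to install and configure Kubernetes in a production environment. We also demonstrated how to use kubelet, kubeadm, and kubectl, and how to configure the admin user.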
